Sometimes reading a flawed argument triggers my rage: I really do get angry, a phenomenon that invariably surprises and amuses me. What follows is my attempt to use my anger in a constructive way. It may include elements of a knee-jerk reaction*, but I’ll try to keep my emotions in check.

Dr. Epstein recently published a badly misguided essay on Aeon, entitled “The empty brain”. The subtitle makes the intended take-home message clear: “Your brain does not process information, retrieve knowledge or store memories. In short: your brain is not a computer”.

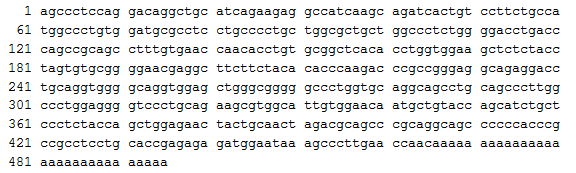

DNA as both structure and Information. From Wikimedia

Unfortunately, the essay is systematically wrong: virtually every key passage is mistaken, and yet, overall, it tries to make an argument that is worth making. Thus, I grew annoyed by the mistakes and misrepresentations (my immediate comment was “this is so wrong it hurts”), and then descended into anger because Epstein is actually damaging the credibility of an approach that I find promising, but is all too often misunderstood or straw-manned.

In what follows, I will blatantly ignore the first rule of civilised debate: I will not try to give a charitable reading of the original essay. I won’t because it would effectively hide the reasons for writing my reply. Instead, I will report the key arguments proposed by Dr. Epstein, explain why I think they are wrong, and then finish off by outlining why I nevertheless sympathise with some of the science it endorses (as I understand it).

Epstein’s essay starts by defining the overall aim:

My nonsense detectors already started to make noises: we can remember lots of stuff, so it’s undeniable that we do contain memories. Perhaps he meant that memories are surprisingly different from what we might think they are? The essay then states that:

For more than half a century now, psychologists, linguists, neuroscientists and other experts on human behaviour have been asserting that the human brain works like a computer.

To see how vacuous this idea is, consider the brains of babies.

Unfortunately, what follows doesn’t show “how vacuous this idea is”; it merely restates the point. The real trouble starts when actual computers are described:

[…]

I need to be clear: computers really do operate on symbolic representations of the world. They really store and retrieve. They really process. They really have physical memories. They really are guided in everything they do, without exception, by algorithms.

Uh oh. Dr. Epstein made it very clear that he doesn’t understand computers. In fact, computers don’t contain zeros and ones (or images, or symphonies, or texts…); they contain physical stuff, highly organised in precise and changeable structures which can be interpreted as zeros and ones, which, in turn, can be interpreted as representations of virtually anything. This point is crucial and something which I’ve discussed at length before (in the context of “brain/mind” sciences, see here and here). Importantly, computers are designed to make their behaviour predictable and understandable. Because of their designed features, the interpretation of their inner workings becomes relatively easy, and thus it becomes possible (not utterly wrong) to say that they “really” do operate on symbolic representations. However, this is true because we explicitly design the interpretation maps (we write the software). In other words, **the symbolic nature of what happens inside our computers is true in virtue of what happens within the brains of people** (who design, program and use computers): there is nothing intrinsic in a computer that makes its internal patterns of electrical activity “stand for” this or that.

That’s to say that one could also make the opposite case, point out that physical computers are just a bunch of mechanisms in motion, and conclude that computers don’t process information at all. That would be formally defensible, but absurd, right? Indeed, it would be: the whole point of computers is to process information. Thus, even if producing an explanation of how they work which completely ignores any concept of information is entirely possible, it would be useless if our aim is to understand why computers behave in certain ways. Information is in the eye of the beholder, and that is precisely why it’s a useful concept. Furthermore, it is entirely possible and appropriate to describe information in terms of underlying structures.
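To make the interpretation point concrete, here is a minimal Python sketch. The four byte values are an arbitrary choice of mine (nothing to do with Epstein’s essay) and the function name `interpretations` is hypothetical. The only point is that one and the same stored pattern yields text, an integer, a floating-point number or a string of zeros and ones, depending on which map we decide to apply.

```python
import struct


def interpretations(raw: bytes) -> dict:
    """Read the same 4 stored bytes through four different interpretation maps."""
    return {
        # Each byte looked up in the ASCII table
        "text": raw.decode("ascii"),
        # All 32 bits read as one unsigned big-endian integer
        "integer": int.from_bytes(raw, "big"),
        # The same 32 bits read as an IEEE 754 single-precision float
        "float": struct.unpack(">f", raw)[0],
        # The conventional "zeros and ones" view
        "bits": " ".join(f"{byte:08b}" for byte in raw),
    }


# Arbitrary example pattern (an assumption, chosen only for illustration)
pattern = bytes([0x42, 0x72, 0x61, 0x69])

for name, value in interpretations(pattern).items():
    print(f"{name:>8}: {value}")
```

Nothing in the bytes themselves says which of these readings is the “right” one: that choice lives in the code, and ultimately in whoever wrote it.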

To make the concept even clearer, let’s look at another biological phenomenon: inheritance and DNA. You can (and should) describe DNA in structural terms: things like the double helix, the shape of nucleotides, the molecular mechanisms of DNA replication, of protein synthesis and so forth. However, once all of the above is done, it is handy to also describe stretches of DNA in terms of pure information, namely the sequence of nucleotides, represented by the letters A, T, C and G. Thus a stretch of DNA can be effectively described by something like this:

The image above is a representation of the gene which encodes insulin. Crucially, it is this kind of description which enabled the production of synthetic insulin and thus the production of cheaper and safer medication. My point: both a purely structural and a purely information-centric description of the insulin gene are possible. The latter is more abstract, and because of that it is frequently more useful.
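As a rough illustration of how far the purely informational description can take you, here is a small Python sketch. The sequence is a made-up toy string, not the insulin gene, the codon table is deliberately partial (it only lists the standard-code codons used here), and the function names are my own. No chemistry appears anywhere: the “gene” is just a string of letters.

```python
# Partial codon table (standard genetic code); "*" marks a stop codon.
CODON_TABLE = {"ATG": "M", "GCC": "A", "CTG": "L", "TGG": "W", "TAA": "*"}

# Watson-Crick base pairing, expressed as a lookup table
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}


def reverse_complement(seq: str) -> str:
    """Return the opposite strand, read in its own 5' to 3' direction."""
    return "".join(COMPLEMENT[base] for base in reversed(seq))


def translate(seq: str) -> str:
    """Translate codon by codon until a stop codon (or the end) is reached."""
    protein = []
    for i in range(0, len(seq) - 2, 3):
        amino_acid = CODON_TABLE[seq[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


toy_gene = "ATGGCCCTGTGGTAA"  # hypothetical sequence, for illustration only
print(reverse_complement(toy_gene))
print(translate(toy_gene))
```

Everything the two functions do could also be described in terms of molecules bumping into each other; the string version is simply the more convenient level of description for this kind of question.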

In the same way, describing the inner workings of computers in terms of information makes perfect sense, but doesn’t negate that a more accurate description would involve physical mechanisms.

Back to Epstein’s essay. So far, we’ve established that his crucial point (“Computers, quite literally, process information”) is at best misleading: they do, but we may say so because it is a useful way to conceptualise how computers operate. In another sense, computers don’t process information, they just shuffle electrical charges; Information Processing (IP) is merely a useful interpretation, arbitrarily added by us, the observers.

The essay continues by remarking that historically bodies and then brains have been described by means of metaphors, employing the most advanced technologies known at a given time. Currently, digital technologies are used, so we may be entitled to predict that as technology advances, we will stop using the silly metaphor of IP and jump on the next bandwagon (also: where is the dichotomy between metaphors and “actual knowledge” coming from?). This may be, but again, it’s a misleading way of looking at what happened: once technology started to produce complex-enough mechanisms, it became possible to conceive the idea that organisms may be nothing more than complicated mechanisms. Subsequently, once Information Theory (Shannon’s – SI) was developed, it became possible to describe dynamic structures in terms of their informational content (storage, signalling and processing). As exemplified by my detour on molecular biology, it happens that this new, more abstract way of describing stuff is frequently very useful, and thus people are looking at the inner workings of brains and nervous systems also by employing the informational metaphor. When an action potential travels along an axon, it is natural, handy and useful to describe the shuffling of ions as a travelling signal. If you do, you are already using the IP metaphor: if it’s a signal, we are already describing it in SI’s terms.

The following step should really clarify where my anger comes from. Apparently Dr. Epstein finds it surprising that neuroscientists don’t know how to describe their subject without deploying IP. He believes they should avoid IP altogether, because, according to him, it’s clearly wrong:

A few little problems here! First of all, the IP metaphor is pervasive because it’s useful, as I’ve demonstrated above. Second, I’ve never heard, and have no need to deploy, such a silly syllogism. The reasoning I’m defending is that it is reasonable to interpret complex control mechanisms in terms of information processing. Brains are complex control mechanisms, and therefore it is reasonable to deploy the IP metaphor when describing and studying their inner workings.

Moving on, Dr. Epstein then attempts to demonstrate that the IP metaphor is damaging neuroscience. To do so, he makes a really important observation: when asked to draw a one-dollar note, people will perform poorly if they do so without having an actual note to copy. This is an important thing to note: people can draw something which resembles the original in important aspects, but most of the details will be missing. The correct conclusion is that our brains are not optimised to store faithful representations, and that whatever it is that they store, it is usually very sketchy. In other words, efficiency and efficacy are normally favoured, accuracy isn’t. Jumping from this observation to the conclusion that the information needed to produce a gross sketch of a one-dollar bill isn’t somehow present in the brain is so blatantly wrong that I don’t even know how to refute it. Unfortunately, it seems that Dr. Epstein wants us to actually draw this absurd conclusion (the “any sense” clause is the deal breaker):

In fairness, Dr. Epstein then tries to make a subtler point:

[…]

no one really has the slightest idea how the brain changes after we have learned to sing a song or recite a poem. But neither the song nor the poem has been ‘stored’ in it. The brain has simply changed in an orderly way that now allows us to sing the song or recite the poem under certain conditions.

In other words, seeing a banknote, hearing a song – even more, singing a song – will change some structural element inside us, presumably in the brain. Fine, this is manifestly what every neuroscientist thinks is happening. Thus, because we can link structures and structural changes to information and information processing, we can, if desired, deploy the Information Processing metaphor. In other words, Dr. Epstein has so far proposed a number of questionable claims, peppered with one interesting observation (which manifestly refutes one of the intended take-home messages): whatever it is that our brains do store, it apparently is surprisingly inaccurate.

At this point the essay goes on to both promote and misrepresent a branch of Cognitive Science which I find very interesting, promising and rightly controversial, that is: Radical Embodiment.

Why do I say “rightly controversial”? Because if one interprets it as above, the whole idea fails to make sense. Hypothesising “a direct interaction between organisms and their world” means that there would be nothing to learn in studying the mechanisms which mediate the interactions and happen to occur inside bodies (would these count as indirect?). In other words, it declares the reductionist approach a dead-end a priori. Trouble is, nobody does this: we do study how sensory signals travel along nerves towards the central nervous system and also what happens within brains in similar ways. The only problem I have with Radical Embodiment is that it might superficially seem to espouse such a view, while I happen to think that it tries to do something which is much more important, and orders of magnitude more useful.

Radical Embodiment is challenging our understanding of “representations” and showing how they are far less “information rich” than what our common intuitions would suggest. It is doing so by showing how much the interaction with the world is necessary for guiding and fine-tuning behaviour. It does challenge the idea that we hold detailed models of the world and interact with those (instead of interacting with the world), and does so for a lot of good reasons, but, as exemplified in this brief exchange, it does not challenge the IP metaphor, it is merely showing how to apply it better!

Dr. Epstein goes on by citing reputable sources and even mentions Andrew Wilson and Sabrina Golonka’s blog (see also their excellent Twitter feed), which happens to be one of my favourite corners of the Internet.

This is one reason why I’m writing all this: if I’m right, Dr. Epstein is badly misrepresenting the Radical Embodiment idea, and in doing so he is unnecessarily making it look mistaken and indefensible. Far from it, it is something that is worth a lot of attention and careful study. To say it with the always thought-provoking words of Wilson and Golonka (2013), the main idea behind the movement is:

To me, it is self-evident that this radical idea is basically correct, and at the same time, it is a reason why it is so difficult to figure out how brains work. One needs to account for much more than just neurons… At the same time, while I do accept the basic idea without reservations, I am also worried that, as exemplified by the short discussion I’ve linked above, radically rejecting all uses of the “representation” concept isn’t going to work: what needs to be done is different, but perhaps something that is best left for another time.

Overall, Cognitive Neuroscience is tricky: it is prohibitively hard, and, as I argue in the introduction here, it is of paramount importance to carefully select the correct metaphors in order to convincingly describe the vast number of different phenomena occurring at different scales (from the psychological, to the neural, down at least to the molecular). In this context, expecting that at one or more of these levels the IP metaphor will prove to be useful (as it is in the case of computers) is entirely justified. Challenging the consensus is something that scientists probably aren’t doing enough, but alas, Dr. Epstein’s attempt is failing to do so.

Notes and Bibliography:

*It’s even more interesting to note that when I write an angry reaction the resulting posts frequently happen to be among the most popular on this blog, see for example here (with follow-up) and here. It’s also worth noting that the essay I’m criticising has collected a very high number of negative comments, see the one from Jackson Kernion in particular.

Wilson, A., & Golonka, S. (2013). Embodied Cognition is Not What you Think it is. Frontiers in Psychology, 4. DOI: 10.3389/fpsyg.2013.00058

You write and, indeed, boldface the statement “the symbolic nature of what happens inside our computers is true in virtue of what happens within the brains of people.” OK, but how about this supplementary principle: “the symbolic nature of what happens inside our brains is true by virtue of what happens in the world.”

Jim,

This is far, far more controversial. I would tentatively agree, but I’m not even sure I’ve reached a consensus inside my own self, so would really tread carefully here. Not to say that I would avoid the subject/claim. See this long-ish essay and comments: perhaps it answers your question, perhaps it muddies the waters, I don’t know. I do know it’s the best I could put together last year; don’t think I’ve made much progress since :-/.

I suppose I should preface my response with a statement to the effect “rude am I of speech.” I’m not a computer scientist or neurophysiologist, and I haven’t been in the philosophy business for forty years. I certainly can’t keep up with the level of discourse of your comment threads. My suggested additional remark (“the symbolic nature of what happens inside our brains is true by virtue of what happens in the world”) was mostly motivated by consideration of what goes on when individuals try to dispense with the world either in theory or in practice. There are lots of versions of this program on the theory side, from Descartes’ method of radical doubt and the phenomenologists’ various versions of bracketing (epoché) to the Chinese room idea and some of Putnam’s stuff on the impossibility of determining reference from inside a symbol system, but what intrigues me most is the experimental approach to what it’s like to be a brain in a bottle engaged in by the mystics. The mystics make sense of their activities by reference to a host of different philosophies, but there’s a lot more commonality in what they report of their experiences. If you make the world go away by sensory deprivation or make it meaningless by the endless repetition of the same phrases, tones, or motions, you eventually go away as well, followed by reality itself, though often after a series of intermediate stages, often including one during which an image of the body replaces the external world; and it remains possible, at least for a while, to make sense of one’s ideas by reference to the cosmic man and his parts, cf. Adam Kadmon and his sephirot among the Cabalists. Hard to get away from your body, even in a monk’s cell.

My insight, if it in fact is an insight, is that the mystics in effect run the Chinese room scenario in reverse. For them, the guy in the room is in fact Chinese, and the question is whether he retains his ability to read Chinese as his normal interactions with things and people are limited to the permutations of symbols. I take it that the fascination with combinatorics that is a feature of many mystical traditions is not an accident. I think philosophy and math professors often find themselves accidentally performing this reverse Chinese room experiment. It is a very different thing to communicate a philosophical or mathematical idea when relating it involves recreating it in yourself than it is when you flawlessly recite the formulas the umpteenth time. Students can sure tell the difference.

It’s not that you can’t understand things in the privacy of your thoughts, just that intentionality has an expiration date. If we’re Leibnizian monads, we’re monads that gradually leak meaning. The relative and temporary independence of organisms due to homeostasis and skin makes it useful to model them (us) as separate from the world and to study the inputs and outputs between them. That’s part of the reason it’s so hard to insist on the intrinsic worldliness of meaningful symbol systems without coming off as some sort of obscurantist who doesn’t know there’s no thought without phosphorus.

Thanks! I’m so glad you wrote this and it got shared on 3QD. I was thinking of doing a line-by-line response.

I am particularly frustrated by the dollar bill example. All it shows is that some representations are fuzzy. That is no more an argument against representation than a corrupted file on a hard drive!

Consider memorization of speech or text. That can be near-perfect. Consider savants who remember the decimal expansion of pi for thousands of digits. Or people who know the entire Mahabharata. Or even a poem or song. It’s absurd to ignore these examples when you’re attempting to argue against representationalism.

The baseball example is also awful. How can a person maintain a “constant visual relationship with respect to home plate and the surrounding scenery” without invariant representations?

My pleasure Yohan.

Careful with the baseball bit: the realisation that you don’t need to calculate the trajectory, but merely keep a constant angle between you and the ball (if I’m allowed to simplify a bit) is indeed a cornerstone / game changer. Sure enough the process is a process, so it isn’t “completely free of computations, representations and algorithms”, it is however radically simpler (or at least different) than what you would expect under the “need to calculate” hypothesis.
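For what it’s worth, here is a toy Python sketch of the “keep the angle constant” rule. Everything in it is an assumption of mine: a 2D world, no air resistance, a gentle pop fly, a fielder who starts ahead of the ball and only starts reacting at the apex, and a hypothetical function name (`chase_fly_ball`). At each step the fielder moves, within a speed cap, towards the spot from which the ball would again be seen at the reference angle. In the simulation that spot is computed from the ball’s current position, as a shortcut for a feedback loop on the observed angle, but the landing point is never predicted.

```python
G = 9.81  # gravitational acceleration, m/s^2


def chase_fly_ball(vx=5.0, vy=20.0, fielder_x=20.0, max_speed=8.0, dt=0.001):
    """Toy 2D chase: hold the gaze angle fixed while the ball descends.

    Assumes the fielder stands ahead of the ball when it reaches its apex.
    Returns (fielder position, ball position) at the step the ball lands.
    """
    t = vy / G  # the fielder starts reacting at the apex
    ball_x = vx * t
    ball_h = vy * t - 0.5 * G * t * t
    # Tangent of the gaze angle at the apex; this is the value to maintain
    tan_ref = ball_h / (fielder_x - ball_x)

    while True:
        t += dt
        ball_x = vx * t
        ball_h = vy * t - 0.5 * G * t * t
        if ball_h <= 0.0:
            return fielder_x, ball_x
        # Spot from which the ball would be seen at the reference angle
        wanted_x = ball_x + ball_h / tan_ref
        # Move towards that spot, but no faster than max_speed
        step = max(-max_speed * dt, min(max_speed * dt, wanted_x - fielder_x))
        fielder_x += step


fielder, ball = chase_fly_ball()
print(f"miss distance: {abs(fielder - ball):.3f} m")
```

With these default values the fielder can always keep up, so holding the angle is enough to end up where the ball comes down. Make the hit flatter and faster (say `vx=15.0` with the fielder starting at `70.0`) and the speed cap starts to bite, so the rule on its own no longer does the job. The sketch also takes the ball’s position as given, so it says nothing about the visual tracking that has to happen first.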

Hmm. Interesting. I’ve heard this idea about simplicity before, but I don’t quite see it. If it is indeed simple, where are the robots that can catch flyballs? What is the mathematical implementation?

The vital computations seem to occur at a very basic level. The retinal image is not stable. The object (the ball) needs to be recognized and tracked somehow. Then the shifting background has to be factored out. The eco-psych crowd talk a lot about how optic flow “contains” all the necessary information, but it still has to reach motor cortex in order to control the limbs, right? Whatever is ‘translating’ optic flow information into a motor command is a form of computation, right? Optic flow is not in the same ‘units’ as motor pattern generation.

It also strikes me that the supposed simplicity argument leaves out the fact that catching a ball is a skill that not everyone has acquired. It takes practice. What is going on during practice? The standard computational neuroscience argument involves gradual synaptic and non-synaptic plasticity that allows for more robust representations. Somehow the non-representation argument leaves out the nitty-gritty details of creating signals that are “legible” to the motor system.

“That is no more an argument against representation than a corrupted file on a hard drive!”

Or, more to the point, than a patient or employee record. Were a payroll system asked to describe an employee, the result would be very sparse … and the vast majority of representations that computers deal with are like that. Consider, for example, how the Linux window manager figures out how to tile the windows on the screen … all it gets is rectangles. And drawing the windows isn’t done by retrieving images, it’s done by building them up out of various sparse representations of its components.

It’s worth noting that Epstein’s subject *could* have drawn a far more detailed image of a dollar bill from memory but chose not to include a lot of information she certainly had. Imagine being told to examine a dollar bill and that you would then be asked to draw it from memory and that you would be paid a sum exponential to the number of details you included. Really, I feel embarrassed for Jinny Hyun … it’s as if Epstein picked the most inept person in his class to make his point.

“The baseball example is also awful. How can a person maintain a “constant visual relationship with respect to home plate and the surrounding scenery” without invariant representations?”

Or without computations or algorithms? Heck, he just *described* a computational algorithm … which requires all sorts of lower level algorithms in order to implement the visual tracking and body motions that accompany it.

[All] just to help the conversation, I think it’s easier if we just leave “flat” replies and avoid responding to each other’s comments (I’m too lazy to find a blog theme that forces this and also doesn’t offend my eyes…) Sorry!

Yohan, you are right, I wouldn’t linger too much on “simplicity”, that’s why I was careful to add “or at least different” ;-). The reason why I’m suggesting to be careful is that the point is nuanced (at least, the way I make sense of it) and it is all too easy to end up in a battle between windmills and straw men. I’m with you in many senses, retinas already do a lot, and one would expect that as signals travel, at each level a lot more transformations happen. As I say this, I admit that signals are being transformed, and thus, I am embracing the IP metaphor. I do so without worrying, mind you.

However, the game-changing turn that I do think we need to take seriously is also buried in what I’ve written in the main post, “efficiency and efficacy are normally favoured, accuracy isn’t”. The accuracy word does a lot of work: applied to representations, it suggests that we have elaborate and complete models in our heads, and if we do, we can then work with them to simulate stuff and do “computations” that are very much similar to what a computer does when rendering a 3D series. It turns out that this idea is either significantly or flat-out wrong (I’m not ready to commit to either possibility), and why shouldn’t it be? If the mid-fielder can just look up and get a quick and accurate measure of where the ball is, why should he bother building an elaborate and computationally expensive model of what’s going on? Since efficacy and efficiency are the guiding principles, it is very reasonable to expect that he wouldn’t.

However, this doesn’t negate that our midfielder holds “some kind of representation” inside him, at the very least, something that corresponds to “a ball is flying across the field and I want to catch it in flight” is in there, somewhere, right? As a result, I can’t jump on the wagon claiming that there is no representation and the IP metaphor is useless, but I do endorse the attempt of impoverishing the representations we expect to find to such an extent that it should make lots of people scream “well, if that’s a representation, then Richard Dawkins is a Catholic nun”. To my satisfaction, I’ve managed to get this kind of reaction at least once, in my raids across the interwebs ;-).

I hope you are getting where I stand, apologies if the above is “just” confusing.

Jim,

I’m a former (very junior) neurobiologist who can’t stop philosophising: I probably find your own comment at least as challenging as you may find the most abstruse of mine. We’ll try understanding each other without haste (do bear in mind I will eventually become very slow with my replies, you know: life, work, etc.).

I do think you might be onto something. In fact, I like this image quite a lot. I will let it simmer in my melting pot for a while, but I’m not confident it will lead me somewhere. The fact is that we’re here immersed solidly in some sort of home-made philosophising of the analytic (if discursive) kind. In this context, trying to accommodate mysticism feels premature to me. But hey, the parallel with professors just “reciting their formulas” is delightful: an apt reward for the effort I’ve put in writing the above. So thanks for this, I hope you’ll hang around.

Don’t get me wrong: I have 1001 comments to make on the issues you are raising, and I do find them interesting. I just don’t have the resources to engage with them without neglecting more immediate questions/conversations. I do apologise.

“The accuracy word does a lot of work: applied to representations, it suggests that we have elaborate and complete models in our heads, and if we do, we can then work with them to simulate stuff and do “computations” that are very much similar to what a computer does when rendering a 3D series. It turns out that this idea is either significantly or flat-out wrong (I’m not ready to commit to either possibility)”

I think I agree here. But that isn’t the main problem with the approach. The ecological “simplification” actually assumes a representation. You ask “If the mid-fielder can just look up and get a quick and accurate measure of where the ball is, why, should he bother building an elaborate and computationally expensive model of what’s going on?”

If the midfielder can look up and know what a ball is, then there is already at least one representation: that of the ball. Object segmentation and recognition are themselves very hard problems. It is not at all clear to me how the information that is “just there” in the optic flow can be accessed by the midfielder at all. How is it possible to get a quick and accurate measure of where the ball is? There is no “ball” on the retina. There are only patterns of activity. These patterns must somehow be transformed into things like foreground and background.

Also, there are plenty of examples in which the visual system is not even involved. Jugglers catch balls they are not looking at. This can be described in terms of various mappings centered on the head, the body, the arm, and so on.

In any case, even if the ecological approach is somehow simpler, I feel like it is skirting the central issue of usable information. How does the “quick and accurate measure of where the ball is” (assuming the brain already has this estimate) reach the motor system to control the limbs and hands? In what “units” are these measures expressed? And what is going on during practice?

Also: if these ideas really are simple, where are the implementations in software? Where is the virtual robot catching a virtual ball using optic flow?

“the realisation that you don’t need to calculate the trajectory, but merely keep a constant angle between you and the ball”

Professional ball players can catch balls that they don’t have time to constantly visually track, and they can do this while rolling on the ground in order to get low enough, while sticking out a mitt at just the right place. And archers, shooters, and golf players must take both distance and wind into account in order to — yes, calculate trajectories. Basketball players must not only do this to shoot a basket, but they must also intercept shots at the right point in the trajectory while at the same time redirecting the ball to a player of their own team. Your so-called “game changer” is simply the well known fact that we use *heuristics* to solve our problems, rather than doing mathematically precise computations … but so do computer systems when the constraints of time and storage make it advantageous.

I’ve also got 1001 comments to make on the issues you’re raising, though the reason it will take time to properly respond is not that I’m busy but that the issues are so very hard. I have to apologize for the obscurity of my remarks. Back in school I acquired the nickname Heraclitus of Aphasia and lived up to it, too. The Presocratics remain a fertile source of inspiration even though all we have left from them is fragments. I aspired to emulate ’em, but I guess I was so lazy, I decided to cut out the middle man and produce fragments right off the bat.

One note: I don’t bring up the mystics out of any particular interest in them from a religious point of view, but because whatever their motives, they engaged in what amounted to systematic psychological experimentation. Over the years, I’ve run across quite a few psychology projects whose protocols more or less parallel exercises recommended by mystics. The grand philosophical interpretations of the results of religious empiricism don’t interest me particularly, but at a less highfalutin level they did some pretty hard thinking about how selves can be assembled and disassembled and I am interested in that.

Hello, I’m certainly not a neuroscientist, nor do I have a Ph.D., so I’m simple in my comments here. First, I was happy to see someone wrote a rebuttal to Robert Epstein’s article. His writing did not sit well with me.

Though my mind does not embody the knowledge that you, or the others who have posted, have, there were several places in his article that bothered me, as I muddled through what he was trying to say. I think it boiled down to . . . Using the metaphor that our brains are like computers is faulty.

I was trying to figure out why he has an issue with the metaphor that our brains work like computers. My idea on that is the brain of man created the computer. So in a sense, wouldn’t the mind operate as a computer? But not be a computer? And isn’t it a wonderful thing that the mind could even create such a mean working machine?

It perplexed me that he would say our brains do not store memory. I thought, how can that be? Yes, my brain might adapt and change, based on what is happening around me, but I have memories of my life, painful and joyful. How would he explain the fact that you recall people, places, tastes, smells, and touch, or hear a song, which triggers an emotional memory? Are not all those types of things embedded in our brains for recall? For example, I touch the hot stove, my memory tells me, don’t do that again, it’s hot.

He wrote, “Computers, quite literally, move these patterns from place to place in different physical storage areas etched into electronic components.” Yet doesn’t our brain file information away, like when the light is red, you stop, which eventually becomes an automatic response to stop at a red light. And I read that the brain makes double loops of information? Thus filing it away, twice. Am I correct?

He wrote, “Computers, quite literally, process information – numbers, letters, words, formulas, images.” I thought correct, that is what they do, but also a brain is capable of processing information, numbers, letters, words, formulas, and images. If not, then how does an artist paint, or a mathematician compute math, or a writer, write? There would be no computers without the mind. Don’t we process information when we compare things? Gee, I think this is a better buy.

In speaking about algorithms he says, “Humans, on the other hand, do not – never did, never will. Given this reality, why do so many scientists talk about our mental life as if we were computers?” This made no sense, to me, as I thought, don’t our brains function by rules and don’t we problem solve, on a daily basis? I mean the brain is wired in such a way that it keeps the heart and lungs working, without us thinking about it, to keep the body alive. Sends signals to tell us when we are hungry or thirsty? If the brain had a will of its own and decided not to follow its prescribed method of operation, we’d be dead. So why can’t the brain be likened to that of the workings of a computer?

The dollar bill illustration seemed silly to me. Not everyone’s brain works the same. But I bet a dollar that if an artist was asked to draw that bill, it would have detail. And there has to be something said about putting people on the spot. The individual has a room of classmates watching, I think nervous/anxiety and such would interfere with the memory of recalling detail.

In my humble opinion, I don’t think the fact that some use the metaphor that our brains are like computers negates the fact we are human beings, capable of love, relationships, and interactions with other people. No, we are not computers, or robots, but our brains function in such a way that we could say our brains work much like a computer. It certainly is a better metaphor than the ones of the early centuries he listed.

Well, that is my two cents. Thanks for letting me share my thoughts, without all the big words! LOL

I’m just getting into neuroscience, and came across Epstein’s article on 3QD. I’ve only read a few chapters of a neuroscience textbook, and a few random review articles, and even I was pissed off reading his article. So much assertion, so little content – it’s his essay, rather, that is empty.

You wrote “Jumping from this observation to the conclusion that the information needed to produce a gross sketch of a one dollar bill isn’t somehow present in the brain is so blatantly wrong that I don’t even know how to refute it.” Right! No words.

Once I read the bit on the baseball I felt a bit sick in my head, I hadn’t heard of ‘radical embodiment’ before, and I’m a little torn that I received the idea from his article. Have you got any literature recommendations for where to start with that?

Thanks for writing what needed to be written!

[…] In fact, computers don’t contain zeroes and ones (or images, or symphonies, or texts…), they contain physical stuff, highly organised in precise and changeable structures which can be interpreted as zeros and ones, which in turn, can be interpreted as representations of virtually anything. This point is crucial and something which I’ve discussed at length before (in the context of “brain/mind” sciences, see here and here): importantly, computers are designed to make their behaviour predictable and understandable. Because of their designed features, the interpretation of their inner working becomes relatively easy, and thus it becomes possible (not utterly wrong) to say that they “really” do operate on symbolic representations. However, this is true because we explicitly design the interpretation maps (we write the software), in other words, the symbolic nature of what happens inside our computers is true in virtue of what happens within the brains of people (who design, program and use computers), there is nothing intrinsic in a computer that makes its internal patterns of electrical activity “stand for” this or that. That’s to say that one could also make the opposite case, and point out that physical computers are just a bunch of mechanisms in motion, and conclude that computers don’t process information at all. That would be formally defensible, but absurd, right? Indeed, it would be: the whole point of computers is to process information, thus, even if producing an explanation of how they work which completely ignores any concept of information is entirely possible, it would be useless if our aim is to understand why computers behave in certain ways. Information is in the eye of the beholder, and that is precisely why it’s a useful concept. Furthermore, it is entirely possible and appropriate to describe information in terms of underlying structures.” https://sergiograziosi.wordpress.com/2016/05/22/robert-epsteins-empty-essay/ […]

Sergio,

I have to agree that I thought Robert Epstein’s essay was flawed but, as someone with a background in psychology as an undergrad many years ago, I thought I’d offer my 2¢.

I think that Epstein’s “dollar bill” example is absurd. I think his assertions about how computers work vis-à-vis the brain are not right.

But I think part of his argument, which he badly stated, and, with at least part of which you seem to agree, is this: computers store things (as text, images, etc.)—they’re obviously not stored as text and images on a hard drive, they’re 1s and 0s but change the 1s and 0s and at that moment you’ve, in effect, changed the text and images. Our brains are different, he’s saying—there is no real analogue to something like text and images or to 1s and 0s in our brains. Rather, having been exposed previously to a poem or a place, we have the capacity to behave in certain ways—we can recite the poem or imagine the scene but we’re enacting those things in the moment. The organism is obviously changed in that it can do now something whereas before it couldn’t—as you say some structure is changed— but that change doesn’t involve “storage.” BF Skinner said that a better metaphor in this regard was a dry cell battery—you can charge a battery and the battery will discharge electricity but no electricity is stored in the battery. (You could say that the battery metaphorically “stores” electricity but that just shows how misleading the metaphor is because there is no electricity inside the battery.) Although it seems weird to say, it’s more accurate to say a person no more stores a poem or a scene in his brain than he stores “bike riding” after he learns to ride a bike than it is to say he stores a poem or a scene like a computer might store an image or some text.

With regard to the ball player, I think Epstein might be reacting to statements like this one: “Catching a thrown ball, for example, requires a calculation of its future location that depends on the perceived velocity of the ball and on the lateral and vertical components of its initial motion.” If “calculation” means some sort of mathematical determination, that is not what is going on. It’s more that the person is moving, again in real time, with respect to the ball, and, essentially zeros in on it. We can derive formulas that describe how that person moves with respect to the ball but that person’s behavior does not involve him using those formulas, he’s just behaving with respect to the ball. It’s contingency-based—you can say there is “information” being “processed” in how the person moves with respect to the ball but it’s simpler and more accurate to just describe the person’s behavior with respect to the motion of the ball. Jackson Kenion says that “the lack of *conscious* computation is not evidence for the complete lack of computation” but I really don’t think “computation” in the way it is conventionally used (i.e., the act of mathematical calculation) is what is going on here, even unconsciously.

I think the computer/IP metaphor is really damaging at least at the level of the organism and behavior. To see what a mess it creates, you only have to look at cognitive scientist Donald Hoffman’s “interface theory of perception” in which “percepts act as a species-specific user interface that directs behavior toward survival and reproduction, not truth.” His argument regarding adaptiveness and veridical perception is absolutely right—it has to be—but his metaphor is ludicrous. My comment about it is the newest one in the comments. I won’t argue that at the level of neuroscience the metaphor is always damaging but at the level of psychology (behavior) it definitely can be.

I will say also that Epstein seems to be arguing his points from the standpoint of Chemero and those guys, who are right about a lot of things, and I’m viewing it from the standpoint of the radical behaviorists.

“computers store things (as text, images, etc.)—they’re obviously not stored as text and images on a hard drive”

Of course they are. What do you think a text or image *is*? Put your magnifying glass to this text … hey, it’s pixels! *Exactly like* what is stored on a hard drive, though the geometry is different. And what are pixels? On a CRT, there’s a cathode ray that swipes across the screen many times a second, causing spots to light up for a very brief moment … it’s all an illusion … or a representation.

“they’re 1s and 0s ”

There are no 1s or 0s on hard drives (except, for instance, in texts like “they’re 1s and 0s”).

Unfortunately, folks like you and Epstein lack even the most rudimentary understanding of the subjects you’re discussing.

Folks,

I can’t begin to explain how much I’m appreciating your comments, all of them, especially because they are so diverse! Lack of time, clarity *and* knowledge will probably mean my replies will be sketchy and generally inadequate, I fear.

Yohan:

That’s why I’ve had the brief exchange with Sabrina Golonka linked in the main post. In other words, I think you’re pushing an open door with me: I share all your worries. More precisely, I find myself half-way between two positions, feeling the urge to find a way to reconcile both [peppered with the fear that I’m missing something somewhere…]. You can read my writings on the subject with this in mind: I see a divide, and think it’s sociological more than anything. However, both sides disagree with me and would maintain that the divide is factual (e.g. the other side is wrong), and who am I to presume otherwise?

Jim:

I never for a moment thought that you were trying to sneak in a supernatural argument, don’t worry about that. The (real or imagined) obscurity of your remarks is welcome here: stretching my mind is oddly pleasurable. Please do send your further thoughts my way, and don’t worry about timings: I am very slow in replying myself, I like to think slowly, and I blog with the intention of documenting the path of my thoughts, there is no “best by date” on any post or comments section!

Diane:

Glad you found reasons to chip in. A part of me always tries to find ways of expressing my thoughts that are accessible to the uninitiated. It’s in part because I feel science and philosophy should be more approachable and part of wider discussions (and also pay more attention to one another), in part because I am incapable of specialising. I suppose I am easily bored, but the result is that I keep moving across different subjects and thus I am always playing the part of the noob myself. That’s all to explain why your comment, without big words, is very welcome. Please keep the above in mind when reading what follows!

You truly and completely lost me here. We can build lots of stuff, but we don’t necessarily operate as all the stuff we can build, right? Why would computers be any different? Yes, we can do maths and lots of “computer-like” things, but I’m not sure this is what you were pointing at.

Uh? Don’t know what you mean here. Do keep in mind that our knowledge of how the brain “works” is very, very sketchy. To get an idea of the sheer volume of stuff that we need to comprehend, this post of mine might help.

Jamie:

The best recommendations I can give would come from here. Tom Stafford interviews Andrew Wilson: see Q5 for an “introduction to” reading list. I note that Yohan didn’t miss this one!

Jeff:

Thanks. You raise good points, and yes, I do agree in part with Dr. Epstein’s argument. A small part, best understood as “I can see where he’s trying to go, and it’s a direction that is worth exploring”. However, and this is crucial: to go there, one needs to use all precautions, for the terrain is slippery. Thus, I was annoyed because I felt the whole essay was undermining the good intentions I ascribe to it.

For example, you say:

At the first level of analysis, you are right, but scratch the surface and the statement above becomes badly wrong. Badly! On a hard drive, there are no zeroes and ones, there can’t be, because zeroes and ones are concepts. Abstract entities. On a traditional “spinning” hard drive, you have persistent (fairly durable) magnetic dipoles. Their orientation represents zeroes and ones. It’s only when you transform them in some other way that their ability to reliably represent texts, images and “virtually anything” is revealed. It’s a tricky concept to grasp, but, as I write in the main text, it is crucial: accepting the IP metaphor without understanding the deep implications of this point is indeed very wrong. However, it is equally wrong to refute the IP metaphor by neglecting the same concept. Smells of culpable ignorance or at best wilful cherry-picking. OK, I perceived it in this way, and that’s why I got angry! Do check the various links I’ve added to the main text, they are there because they link to my previous writings and discussion on this matter (they may help clarify).
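To make the point about interpretation concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the eight byte values are arbitrary, they stand in for "whatever physical pattern is on the disk", and the function names (`as_text`, `as_integers`, `as_bitmap_rows`) are made up for this example rather than taken from any real file format. The same pattern is read three ways, and nothing in the bytes themselves says which reading is the "right" one.

```python
import struct

# The same physical pattern: eight arbitrary bytes chosen for illustration.
raw = bytes([0x48, 0x65, 0x6C, 0x6C, 0x6F, 0x21, 0x21, 0x0A])


def as_text(data: bytes) -> str:
    """Interpret the bytes as ASCII characters."""
    return data.decode("ascii")


def as_integers(data: bytes) -> tuple:
    """Interpret the bytes as two little-endian unsigned 32-bit integers."""
    return struct.unpack("<2I", data)


def as_bitmap_rows(data: bytes) -> list:
    """Interpret each byte as one row of an 8-pixel-wide, 1-bit image."""
    return [format(b, "08b").replace("0", ".").replace("1", "#") for b in data]


if __name__ == "__main__":
    print(repr(as_text(raw)))      # the pattern read as text
    print(as_integers(raw))        # the same pattern read as numbers
    for row in as_bitmap_rows(raw):
        print(row)                 # the same pattern read as a picture
```

Which of the three readings counts as "what is stored" depends entirely on the interpretation map we choose to apply, which is the sense in which the zeroes and ones are not on the disk.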

Yes, Epstein was reacting to statements like the ones you describe. Yes, those statements are wrong. I pretty much subscribe to the whole paragraph you wrote. Because of my previous point, however, the objection that is being raised has no teeth. The point is that the structures and structural dynamics that allow the midfielder to play can be described in terms of Shannon information and IP. Furthermore, since they can, lots of people think that doing so can greatly help understanding how such mechanisms operate. It is that simple, in the end. Nobody is denying that IP is used as a metaphor, it has to be, because computations are abstract.

Finally, I don’t see why you think Hoffman’s interface theory of perception is deleterious. TBH, I don’t understand why people get so excited about it. The basic idea is that we perceive stuff in ways that are optimised by (shaped by across-generations) utility. We don’t perceive the world as is, but a lot of work happens before the conscious level, so as to allow us to receive the information that is potentially relevant to us. The analogy with a PC user interface is very apt, but it really has little to do with the IP metaphor, not in the sense that “IP metaphor = true => perceptions = desktop icons”. For me the basic idea is so obviously correct that I can’t see why anyone would even bother attacking it: take colours. The same perceived colour can be made up by entirely different combinations of wavelengths, but it’s also true that the exact same wavelengths can be perceived as very different, depending on context (see optical illusion pic here). Hoffman’s theory elegantly explains why and IMNSHO does so by focussing on exactly the right mechanisms at play. See also here.

Overall: my main problem with Epstein’s essay is that he’s trying to go in the right direction, but because he uses the wrong arguments to go there, he goes much too far, making the attempt counterproductive (IMHO).

Does the above help clarify where I stand?

Thanks for your reply. Yes, it does clarify where you stand. I think we might agree more about what Epstein is trying to say than I took from your post. He definitely made some really bad arguments. As for 0s and 1s and magnetic dipoles: yeah, I wasn’t sufficiently precise but I think it doesn’t make any difference for the purposes of the argument.

I won’t say Epstein is arguing the following but it’s my idea, based on what he wrote, of what he might argue:

Magnetic dipoles (or whatever is on the hard drive) can be transformed in some way to give rise to text and images. You can trace a line from the magnetic dipoles to 1s and 0s to the images and text files such that you can reasonably say that the images and text files “exist” in the form of magnetic dipoles, as yet untransformed. In that sense the images and text are “stored” on the hard drive.

Let’s take some behavior like the one I mentioned earlier: bike riding. A person can’t ride a bike and then learns to. When that person rides a bike again, any time, no one says he has “stored” and then “retrieved” bike riding behavior—he’s simply behaving, he’s riding a bike. Every instance of his riding a bike is a new behavior. Now obviously something in his state as an organism has changed but it’s different than the images/text example on the hard drive because “bike riding” doesn’t exist in the same way in whatever changed state there is—it doesn’t exist at all until it occurs—as we might say text/images exist on the hard drive. (To add another, possibly confusing, metaphor, an avalanche doesn’t exist and it is not “stored” in the configuration of rocks that gives rise to it—it only exists when it actually occurs. Sure, the images and text on a hard drive exist as images and text when they’re instantiated as such but they exist in a way these other things don’t in the state that gives rise to them.)

Epstein is saying that something like saying a poem or describing a scene where someone has been exposed to that poem or that scene before is more like the bike riding situation than the images/text on a hard drive situation. The poem or scene simply do not exist before they are enacted. They’re not in the changed state of the person in a way that is similar to that of images or text on a computer hard drive; they are not in the changed state of the person at all because, like bike riding (or avalanches), they do not exist until the person behaves.

I am not sure you agree with my statement of the argument but I think that more fairly represents the argument in pretty simple terms. I understand that everyone is using the HD/IP thing as a metaphor but Epstein is saying it’s wrong as a metaphor. (Try to give credence to your best interpretation of what he is saying, if you can; that’s what I am trying to help you do. Then see how, or if, it fails.)

As for Hoffman, I have no problem, none, zero with what he is trying to argue.

But I have two objections to it, one very minor and the other major. My minor objection is that the way it is framed is so damn confusing. Hoffman says “perceptions of an organism are a user interface.” Given that someone can interpret the way he is using “user interface*” as meaning roughly “something that is designed so as to be perceived in a particular way,” his cardinal tenet comes out, more or less, as “perceptions of an organism are something that are designed so as to be perceived in a particular way,” which, obviously, makes no sense. One part of one is entailed in the other; it’s like saying “Navigation is a map…” (not that that is supposed to make any sense but you get the idea). It’s just bad writing and unclear thinking (which is typical of behavioral scientists: they cannot write with any precision about their own field of expertise. I say that, having been, many years ago, a very proud psychology major).

My major objection is that the whole “interface theory” idea is so unnecessary. (I realize, reading Hoffman’s paper, that he is responding to reconstruction theory, which, really, I can’t even imagine why anyone would be expounding if they paid the slightest attention to evolution. So I guess he has to make up his own competing theory to respond to that one.)

To give you an idea of what I mean, here is what Hoffman has to say:

Here’s what I would say (and, believe me, I am not trying very hard):

You can quibble with the wording but, to me, that is much more in line with what evolution actually says—seeing is just behavior that, like any other behavior, evolution acts on—and it avoids completely this added (confusing and unnecessary) intermediary of an “interface.” Hoffman’s theory is elegant in the sense that it fits the situation pretty well—if you have to come up with some additional concept to explain what is going on. But you don’t—which is why I think it’s an inelegant mess—it adds nothing to the account already given by evolution and evolution is certainly elegant enough. (If Hoffman wanted to say something like “we could call this ‘the interface theory of perception’ if we needed a name but we don’t—it’s just good old evolution applied to what the organism sees,” I’d put up with that.)

(BTW, I did read some of your other posts and they really are great. I come from a very behaviorist background—and I think on a “behavior” level, not a neurosciences level—so a lot of the thinking is new and different for me. Thanks again for your earlier response.)

*I do UX work professionally.

“no one says he has “stored” and then “retrieved” bike riding behavior”

Of course there are people who say that. Me, for instance. The fact is that a large amount of what we do is remembering what we did once before and then repeating it … this is how people learn to type (let’s see … last time I put my forefingers on the little dots on two of the keys … the f and j … so I need to do that again) or dance or drive or … and then the storage of these behaviors migrates from the cortex into “lower”, more direct layers and we develop “muscle memory”.

“he’s simply behaving”

This is how *not* to understand anything. The point is to *explain* a specific behavior, not to merely state that it’s happening. And there is nothing “simple” about the skill of riding a bike, as is clear from something like https://www.youtube.com/watch?v=MFzDaBzBlL0

“Every instance of his riding a bike is a new behavior.”

Again, this is how *not* to understand anything. All these “new” behaviors have *something in common* … two bike rides aren’t two different behaviors the way that riding a bike and knitting a sweater are two different behaviors. All the instances of riding a bike share retrieving bike riding behavior and all instances of knitting share retrieving knitting behavior.

I’m still thinking about doing a line by line deconstruction of his “essay”…

Oh…

I notice there’s a Behaviorist here, so I’ll try not to be too insulting…

You cannot define a Human Being as a “Behavioral Organism”, when they’re Capable of Reason and Logic, above and beyond mere behaviors and feelings.

Let’s look at Linguistics, for a simple example. I’m putting these little characters on the screen. Symbols, that connect together to form words, that have Meaning, Definitions; Together they form Sentences and paragraphs with which one can convey a concept from one mind, to another mind.

In and of themselves, they’re meaningless. Human Beings have a Built in Capacity(a subsystem with sorting algorithms, pattern recognition, a lexicon, etc) to ~process~ these symbols and interpret the meaning we choose to impart. Or the Reader may choose to interpret a Different meaning, based on how They feel and understand the words being used. We each have our own “private” understanding of the languages we use.

Choose. Free Will. Human Beings are ~not~ reactive, emotional, organisms, that go around merely responding to stimuli.

The symbols, of themselves, also convey no emotion. I can’t make the symbols on the screen “impart emotion” because emotion is generally governed by Biological subsystems that interpret Physical attributes, such as Body language, tone and volume of Voice, eye contact. Things like that.

Human Bodies are an “amalgam of Eukaryotes” (organisms) many of which function independently of the Controlling Mind… you don’t like the “Interface” concept… Well too bad. Feelings aren’t Facts. You’d know this, if you studied Cognitive Psychology, instead of Behavioral.

The Maverick Jesuit wrote an almost line-by-line rebuttal of the entire “essay” in the comments section. Pretty nice, if I do say so =)

A response regarding computers vs Humans. A lot more simplistic than what Sergio wrote, though…

Rather than equate a Human Being to a Computer, how about understanding a Computer in terms of how a Human Being is, in a simplified form? Using the examples provided in the original essay, of course… Keep those in mind.

How do folks think people come up with “inventions” in the first place? They study the world around them, and seek to improve on what Nature and the Universe creates, for their own and others benefits. Some people attach a price tag, some people don’t. With Jonas Salk, being a personal favorite of mine, I like to give information and understandings(concepts) away, for free. It’s up to you to decide what to do with it.

I used to do tech support where I have guided, over the phone “little old ladies” in installing memory (RAM) into their computers, as well as “showing” blind folks how to navigate their computers in order to correct errors they were having connecting to the internet. That’s what I did, while I was in college.

I also got my first computer in the early 1980’s. A Texas Instruments TI-99/4A. I learned how to program in BASIC (spaghetti code(kinda syllogistic)) before there was even an Internet for people to be aware of. Broke it, fixed it, learned how to use it, all before it was even possible to just ask Google for answers to my questions. I used books and trial and error.

I’m going to use stuff from those in the following examples. Keeping it fairly simple and easy to understand.

A basic computer, without an Operating System, has physical parts that do things. You know… like we have a Brain with a bunch of different parts, that do things, inside of a body, that has a bunch of other parts, that do other things. And a Guiding Intelligence that’s capable of making choices based on the information and understandings that it has. Way better than a computer. The best computer you have available to use, is the one between your ears. =)

Back to the plastic.

A computer has certain parts that one might be able to relate to a Human Being.

A processor, or more than one, that functions as the “brain” of a computer. It does most of the “critical thinking” processing information, running programs, the “Beef” in the burger, so to speak.

Multi-processing, something Human Beings are actually capable of, even though some folks often try to “prove” otherwise, not taking into account that we experience time Linearly, so we can only kinda do “one thing at a time”… we can Think and Feel more than one thing at a time, however.

Case in point. We have “5 senses” that people often talk about, as if they exist separately. Taste, Touch, Sight, Smell and Sound. Most of us have those. We also experience Emotions. A “6th sense” where combined with our Cognitive abilities, often results in Intuition. Do we experience these things, only one at a time, in linear fashion? Or are they all overlaid, on top of each other so that we experience things simultaneously?

Multi-core and/or multi-processing.

Back to plastic.

Computers also have Short term memory, called RAM(Random Access Memory) that can be accessed by the Processor(s) to store or retrieve data while the processors work on other data.

See… I don’t know ~exactly~ where I’m going with this yet… ~However~ I have “stored” the idea of “Relate how a Computer is like a Human Being” that I refer back to, while I write. That’s how I write. That’s how I write “grade A” papers in college, more or less within a few hours. I apologize for being a genius. To be honest, I think Every one actually is. We all have the same basic brains and brain structures, but school systems and misunderstandings of the Science of how brains work, and how Psychology works, often inhibit people understanding the Scientific Facts involved…

Yes, additionally I’m exposing our educational systems, behaviorism(when taken by itself), and psychiatry, for the frauds they currently are in the course of my comments. Too much Nonsense in these areas, and it’s past time people learned how to separate the differences between Facts and fictions(opinions and feelings) that sometimes Feel true, that are also sometimes presented in the Presence of actual Facts, that often seem to lend credence to the falsities they actually are.

Back to plastic.

Long Term Memory, also known as a Hard drive. We store pictures of our kids on these things.

Do we need physical pictures to remember what our kids look like? How they looked as children? and as Adults? Sometimes. Some people have memory problems, but not all people. Some folks have Excellent memories of these kinds of things. Certainly even people with Poor memories can pick their own children, as theirs, from out of a crowd.

Let us get a little Biblical, since the good Doctor decided to dismiss God, and my being a Jesuit… well… I’m allowed. It also relates back to his comments regarding “man made from clay” as if “man” doesn’t seek to imitate God (making stuff from Stardust).

“man”(Humanity, as in Human Beings. “men” too often get all misogynistic, and are often the Worst cases with regard to pretentious “authority” forcing and controlling things, rather than allowing for freedom, etc… or pretense at authority because of the letters they have after their name… Alphabet Soup. No offense, guys.)

Let us give our computer ears(Microphone) so that it may hear.

Let us give our computer eyes(Camera) so that it might see.

Let us give our computer a mouth(Sound-card and speakers) so that it might speak.

Let us give our computer systems to regulate itself, automatically (electricity vs digestive, fan vs blood, sweat and tears; air cooling in both)

I can guide someone, over the phone, by Visualizing what they’re going through, from their perspective, what they relate to me, from memories and understandings I have in my own mind. A Blind woman lacks sight, but she’ll have an audio system on her computer that “reads” the text and transmits that, as audio, that I can, in turn, interpret, as visualizations, in my own mind, and relate back to her, what things to scroll through, and click on, so we can get her re-connected to the internet.

I can guide someone, over the phone, how to find the screws on the back of her computer, how to find the right shapes, the right locations, the right little tabs, to eject the old memory, and install the new, using words and phrases, that impart meaning, with visualizations in my own mind, without having to be there, in person, to install her memory(RAM) for her.

All it requires is that I use my own mind, with patience and understanding of the Other, and the use of my own Mind in the capacities it was given, built and designed with, in the service of others, without regard to my own self.

You wanna know what the differences are between Human Beings and computers?

Human beings feel pain, they get confused, they get messed up by lies and BS perpetrated by the news and media, et al, because people ~Feel~ and ~Think~ at the same time, and relate how they think and feel, to the ways they thought and felt in the past, and correlate that to the Now; This can also be extended to Other people, though. Christ said these things; It’s in the Bible. No man is an island. None of us exist in a vacuum.

Human Beings also have Free Will. They aren’t reactive “organisms” that merely “respond to stimuli” unless they’re ~taught~ that kind of nonsense, and learn to accept it, as if it were a fact, because of the ways they’re often Hurt and Harmed if they don’t.

And far too often, those who have come before, put that same thing, on those who come after.

Too often people “automatically” ~feel~ that something is true, regardless of if it actually is or how, along with fallacious arguments to authority:

Jeff,

I am guessing we are marginally talking past each other; I get this impression from your bike-riding example. I’ll try to clarify.

There are other ways of making the following point, but I’ll stick with your action-centric view.

Say you buy a new laptop: it comes with the operating system and all, but no additional software to actually do much with it. You install (or write your own) little program to make it draw beautiful fractals (I remember those from back then!). The particular program you install/write has some heuristic side, paired with the pseudo-random number generator of the computer: depending on the precise time when you execute the program, the variables chosen to feed into the fractal-making formulas will change. The result is that each time the program executes, it will generate different fractals.

Observations:

1. Drawing the fractals is an action, it’s something the computer actually does (makes the patterns appear on the screen).

2. The instructions to perform such action(s), after installing/writing the program are now stored as a very long series of magnetic dipoles on the hard-drive.

3. Because we can isolate these series of magnetic dipoles, we can clearly see how the program itself is made of information. Information is abstract, a concept we use to understand what’s going on, but we can see how the program can be described in terms of “pure” (entirely abstract) information.

4. In a very prosaic sense, we can say that installing/writing the program is what allowed the laptop to “learn” (with scare quotes) how to draw fractals.
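For readers who want to see the fractal example in concrete terms, here is a minimal sketch of such a program. It assumes a Julia set as the fractal and a plain-text rendering; the function names `choose_parameters` and `render_julia` are made up for illustration and are not taken from any particular program.

```python
import random
import time


def choose_parameters(seed):
    """Pick the constant c for a Julia set from a seeded pseudo-random generator."""
    rng = random.Random(seed)
    return complex(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))


def render_julia(c, width=60, height=24, max_iter=30):
    """Return an ASCII rendering (list of strings) of the Julia set for c."""
    rows = []
    for row in range(height):
        y = -1.2 + 2.4 * row / (height - 1)
        line = ""
        for col in range(width):
            x = -1.8 + 3.6 * col / (width - 1)
            z = complex(x, y)
            steps = 0
            # Iterate z -> z*z + c until the point escapes or we give up.
            while abs(z) <= 2.0 and steps < max_iter:
                z = z * z + c
                steps += 1
            line += "#" if steps == max_iter else "."
        rows.append(line)
    return rows


if __name__ == "__main__":
    # The seed depends on the precise time of execution, so each run
    # feeds different values into the same fractal-making formula.
    seed = time.time_ns()
    c = choose_parameters(seed)
    print(f"seed={seed} c={c}")
    print("\n".join(render_julia(c)))
```

The same stored instructions produce a different picture on every run, yet feeding in the same seed reproduces the same picture exactly: the action varies, while the information that enables it stays put.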

Still with me?

If you are, we can proceed with analogies. Let’s think about a particular picture or text. For the computer to be able to display and/or print it, we need a program for doing so, and then the appropriately encoded file describing the text/picture. There are parallels with the example above:

1a. Showing or printing the image/text is an action just as drawing fractals.

2a. The instructions to perform such action(s), after installing/writing the program are now stored as a very long series of magnetic dipoles on the hard-drive. They include instructions and encoded information (the “file” to be used).

3a. Because we can isolate these series of magnetic dipoles, we can clearly see how both the program and the file can be described in terms of “pure” (entirely abstract) information.

4a. In a very prosaic sense, we can say that installing/writing the program and copying the file onto its hard drive is what allowed the laptop to “learn” (with scare quotes) to show/print the file.

In both cases, the physical difference between the laptop before and after “learning” to do a given action is neatly isolated on the hard drive. In there the changes are also neatly isolated (because we designed computers specifically to be intelligible), and are ultimately physical, structural changes. Something in the computer changed its physical properties in a very organised way (structural change), and as a result the laptop can now do something it couldn’t do before.
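To make “neatly isolated structural change” concrete, here is a toy sketch. It assumes a hypothetical 16-byte “hard drive” modelled as a `bytearray`, with the bytes `b"draw"` standing in for the installed program; real drives involve file systems and much else, so this only illustrates the idea.

```python
def changed_bits(before, after):
    """Return the positions of the bits that differ between two equal-sized states."""
    if len(before) != len(after):
        raise ValueError("states must have the same size")
    positions = []
    for byte_index, (old, new) in enumerate(zip(before, after)):
        diff = old ^ new  # XOR leaves a 1 wherever the two states disagree
        for bit in range(8):
            if diff & (1 << bit):
                positions.append(byte_index * 8 + bit)
    return positions


disk_before = bytearray(16)           # hypothetical blank "hard drive"
disk_after = bytearray(disk_before)   # copy of the same state, to be modified
program = b"draw"                     # stand-in for the installed program
disk_after[4:4 + len(program)] = program

diff = changed_bits(disk_before, disk_after)
print(len(diff), "bits changed")
print("all changes fall inside bytes 4-7:", all(32 <= p < 64 for p in diff))
```

The list of flipped bit positions is a complete description of what changed, and it is pure information: it says nothing about magnetism, only about which binary states differ.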

Last analogy: learning to ride a bicycle.

1b. Riding a bike is an action. Like the fractals, each time you do it, it’s the same action (conceptually), but the actual physical behaviour is never the same.

2b. To learn to ride a bike, you had to practice. Through practice some structure changed inside of you, and no one knows exactly what these structural changes are.

3b. Because we don’t know what structural changes happened, we can’t describe the changes in terms of pure information.

4b. Nevertheless, because of Shannon’s Information theory, we know that structural changes can, once identified, be described in terms of information.

Therefore, learning to ride a bike can be described in IP terms. This is not to say that it should be described in this way. So far I am only explaining why we have all the reasons to expect that this operation is possible. Whether this operation is useful is another question. My provisional answer to this latter question is that given the vast distance between molecular mechanisms and behaviour (I do assume psychological phenomena sit therein somewhere) expecting the IP metaphor to be useful on at least one “level” is a safe bet. It’s a safe bet because we already know the metaphor is very useful to describe/understand how neurons work.

We can inventively try to rescue Epstein’s essay and say, “hey, maybe he means that at the psychological level the IP metaphor is useless”. It is possible that that’s what he meant, but it is very much not what he wrote, so we are solidly in wild speculation territory here. Furthermore, besides the general aim, he gave no actual reasons to reach this “inferred” conclusion, so we are none the wiser.

Coming back to your point:

I’m assuming you know why I disagree. Indeed, “the poem or scene simply do not exist before they are enacted”, however, the information necessary to ride the bike (just the program, pure instructions) or produce (recite, write) the poem (instructions and data) is necessarily stored somewhere inside us. This is guaranteed because of how we define the concept of “information”: given the definition, this necessity is not a matter of opinion. Thus, the required information indeed must be somewhere in the changed state of the person exactly in the way images and text are stored on hard drives. The only difference is that because we designed the computer, we know a priori what is happening and thus we have very little practical difficulty in tracing the information down to its physical instantiation. In the case of brains, we have no idea of how to do this, but because we know it must be possible (assuming no magic/supernatural element) people think it’s worth trying.

So, overall, I’m glad you made the effort to re-state Epstein’s point more explicitly (I agree that’s probably what he wanted to say), but I’m afraid I’m unmoved: I still think it’s flat-out wrong.

I’m not an expert on Hoffman’s ITP, so perhaps we should drop the subject. I don’t have anything to quibble about your re-phrasing (for once!).

[All: Jeff’s quote, and mine below come from here.]

I’ll just add something based on Hoffman’s conclusion:

I think he’s right, and importantly, even without knowing anything about the specifics of “reconstruction theory”, I share the impression that at least many experts “assume that perception [directly] estimates true properties of an objective world”. Searle comes to mind: very recently he wrote a whole book trying to defend this view. According to this review, Searle does have some interesting points to make, but he is also very clearly stating that ITP is completely wrong. That’s to say that I do see why it is worth trying to make arguments such as Hoffman’s.

Sean: wow, lots of words to go through there. After “parsing” 😉 them a few times, I get the impression there isn’t a question/argument for me to address. Perhaps you just needed to vent (just as I did). Thanks for the pointer to Maverick’s comments, would probably have missed them otherwise. (Are we sure you are two different people? 😉 )

Only comment I can offer is that the behaviourism strand that treats the whole domain of the mental as utterly irrelevant is indeed a disgrace (IMHO). Other less radical forms have lots of interesting points to make, though.

Sergio

I appreciate your points.

And we can drop Hoffman. (I thought about it later—I might have been a bit too negative on his theory.)

I was reluctant to bring up the bike-riding example because it is, actually, a lot closer to what computers do than the issue with images, text, and all, and so it muddies up the issues. I was really mentioning the bike-riding example to get at the behavioral aspect of the issue we are discussing. The point was not about bike riding and IP; it was about bike riding and things like seeing and hearing. I appreciate that you are going with my “action-centric view” but maybe we are differing on what the action is. (See below.)

Rather than make that argument more explicit (I am not sure I can—and it was more of an aside), let me say something that more directly addresses the issue, which occurred to me after I wrote the comment. Here are some situations, which, if they are not what Epstein is saying, are consistent with it:

You see something, say, a dollar bill. At that moment you are in a specific state—your brain has a very specific pattern of neural activity. That state is seeing the dollar bill—or, really, that state is the identity of seeing the dollar bill. You are not creating some “internal representation” of the dollar bill in order to then see it—you are simply seeing and what you are seeing is the dollar bill.

When you visualize the dollar bill—when you see it in its absence—your brain is doing something similar to when you saw the dollar bill when it was present. Your brain can’t retrieve some “internal representation” of the dollar bill because (as we just said) there isn’t any. And it wouldn’t need one, in any case—because if the pattern of neural activity is seeing without resort to some “internal representation” when the stimulus is present, then there is no need to resort to some “internal representation” in seeing the stimulus when it is absent. All that needs to happen—and does happen—is your brain is in some similar state as when you saw the actual stimulus. What your brain is doing is not recreating the stimulus, it is recreating seeing the stimulus. The behavior is with reference to what you did previously in seeing it, not to what the stimulus is.

By contrast, a computer does retrieve some representation of an image and recreates it. In its situation it is not seeing—and no one argues that it is. But the argument (from the paragraph above) is that when a person is visualizing or recalling an image, he is, in essence, seeing—and he is doing it in a “second order” way—that is, he is recreating the conditions in which he saw before (i.e., he is not retrieving a previously stored representation). If someone resorts to an IP metaphor of storage and retrieval rather than what is actually going on (assuming the account above is true), that gives a false impression. (Of course let’s assume that there are or can be computers that do just what people do—then, sure, the metaphor works. But the point is not to justify an IP metaphor—the point is to accurately describe behavior.)

You say

OK, well, first of all, “the information necessary to [do x, y and z] is necessarily stored somewhere inside us”—I don’t know how you are defining the concept (is it in your “What the hell is ‘Information’ anyway?” post?) but your post points to the Radical Embodiment Cognitivists who acknowledge “how much the interaction with the world is necessary for guiding and fine-tuning behaviour.” (BF Skinner certainly said that also.) I think you could qualify it in a way that would make it less subject to challenge so I won’t challenge that.

And I’ll accept that the “required information” is stored just like text and data is stored on a hard drive.

But the “required information” is not “like” text and data in that it is self-referential—it is about the state in which the text and data can be created. And because it’s about recreating that state, it has to have different information than the information about the text and data stored on the hard drive.

Epstein doesn’t say there is no information in the brain—he says there are no “representations” of the things people might commonly think of (e.g., words, images) that are transformed, stored and retrieved in recall and recognition tasks. From the foregoing, you can see that I think that is a fair statement.

I definitely had the impression that Epstein is trying to say in his piece that, at a psychological or behavioral level, IP is, well, at least damaging if not useless. (The guy is after all a psychologist and the stuff he is saying falls right in line with radical behaviorism, even if he is mentioning the Rad Embodied Cognitivist crowd.) If you are looking at it from a neuroscience point of view, that’s a different ball of wax. I’m not trying to rescue him—I’m trying to give him the fairest possible reading with which to assess his claims and one that is in line with where he might be coming from. You say right off the bat that you are not inclined to give him a charitable reading and I am not saying you have to or you should. But I think, even though he argued his points really badly, there is something to what he is saying.

“You are not creating some “internal representation” of the dollar bill in order to then see it—you are simply seeing and what you are seeing is the dollar bill.”

What does “seeing” mean here? The fact is that there’s a huge amount of processing and *representation* necessary for light reflecting off a dollar bill in the direction of your eyeballs to result in the cognitive recognition that there is a dollar bill in your field of sight. Without understanding that, nothing else that you write is reliable. In order to draw a dollar bill, you have to draw upon some of the same processing modules and representations stored in your brain … and some of that can be tracked at a gross level via brain scans that show areas of activity.

“By contrast, a computer does retrieve some representation of an image and recreates it.”

How does the Windows OS draw a “window” on the screen? It’s not by retrieving some representation of an image of the window. How do animation studios produce animated films? It’s not by retrieving representations of images of trees, houses, people, etc. These things are *modeled* and *encoded* … which is the same thing that brains do (which is not to say that the models or encodings are the same or even similar). The problem that both you and Epstein have is that your own models of how brains and computers work are too sketchy, too flawed, to get a handle on these issues.

“Epstein doesn’t say there is no information in the brain”

Yes, he certainly does: “But here is what we are not born with: information … Not only are we not born with such things, we also don’t develop them – ever.”